How Modal built a data cloud from the ground up

If you’re looking for a place to run an open source model like Llama 4 or Stable Diffusion, you’ve got a lot of options these days. Together, Fireworks, Replicate, Fal…the list goes on.

Most of these platforms work roughly the same way. They upload the latest versions of state of the art models, do some engineering work to make the models run nicely in the cloud, and then release them as dedicated endpoints.

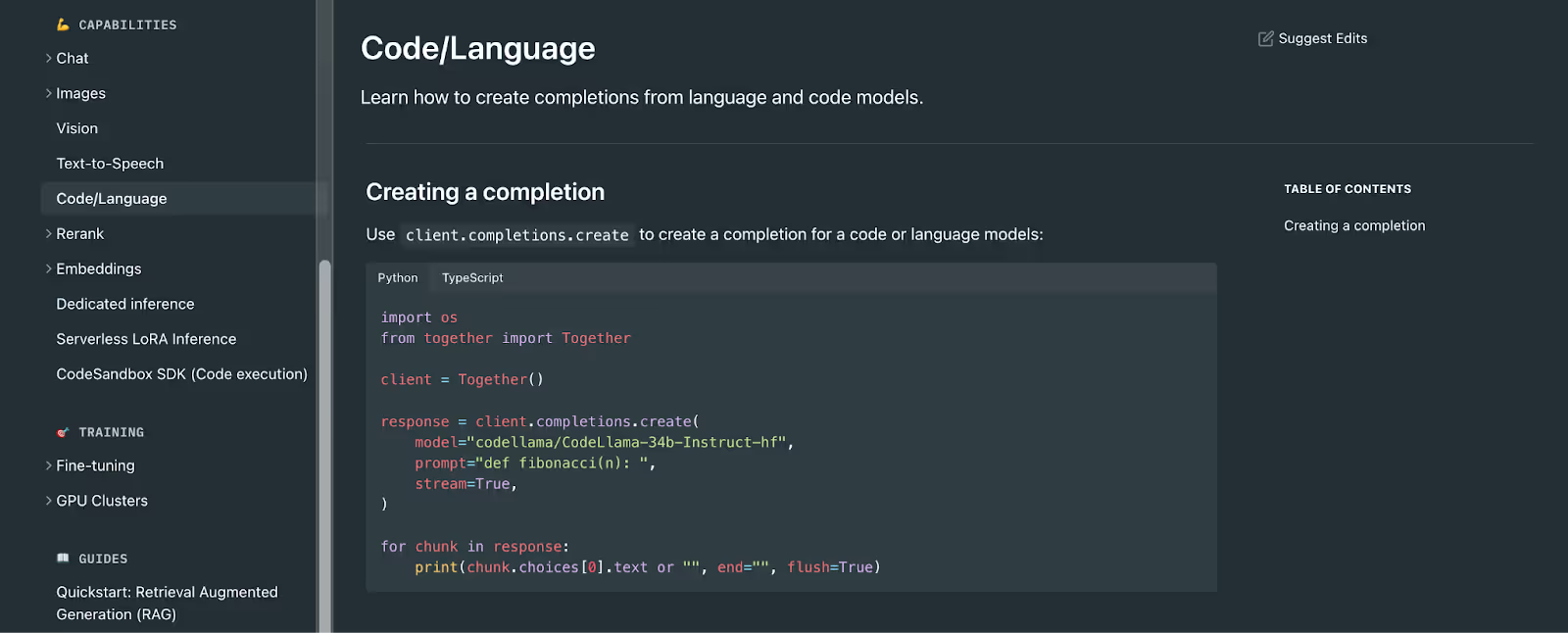

Take a look at Together’s docs, for example. The quickstart has you instantiate the Together client, and then use their chat method to prompt a model of your choice. Docs are organized by what models can do, like generate code, parse images, or chat with a customer.

Fireworks is more of the same:

As well as Replicate:

But there’s one odd child in the bunch.

Like the other companies listed here, this particular platform also helps you deploy and run AI in the cloud, with recipes for everything from Stable Diffusion to the latest Llama model.

But take a look at their documentation, and there’s something strange going on…

Custom container images? Dicts and queues? Scheduling and cron jobs?

There are no model endpoints; you need to upload and use them yourself. There are no simple APIs for running chat or code completions; instead there are decorators to automatically run your code in the cloud. There’s persistent storage, code sandboxes, integrations with S3 and Datadog… what’s going on here?

Let me introduce you to Modal, the most interesting place in the world to run your AI workloads. They’ve taken a completely different approach than everyone else in this space. They built an entire container runtime from scratch. A new file system (two, actually). A framework for running workloads across multiple clouds, all abstracted from the user with a simple decorator UX. And they’re betting that this lower level, more flexible, almost cloud-like system is what AI engineers are going to really want.

This read is going to go behind the scenes of how Modal built and scaled a data cloud from scratch, from our front row seats as their first investor and partner. Based on extensive interviews with the team, we’re going to cover a few topics in technical detail:

- Why the product is so unique: Modal didn’t start as an AI company

- How (and why) Modal built their own container runtime and orchestrator

- How Modal built a multi-cloud routing system for GPU capacity

- Their unique decorator-based developer experience

Let's get into it.

The AI cloud that was never supposed to be an AI cloud

One reason that Modal is so structurally different from all other AI clouds is that Modal is not an AI cloud. At least not by design.

Modal starts with Erik Bernhardsson. You might know him from his Twitter. He’s spent his career building and managing data systems, starting with the recommendations system at Spotify, which no doubt you’ve probably used. While at Spotify he open sourced Luigi, a Python framework for building pipelines of batch jobs a la Airflow. They win my award for favorite OSS logo:

Though Spotify moved away from Luigi in 2022, it still looms large for OG data engineers as one of the first frameworks built for them in a world where they didn’t get much attention.

Throughout his time at Spotify, and later as the CTO of a highly data intensive mortgage company, Erik was thinking about something that a lot of data teams thought about: it’s too hard to work with infrastructure. The feedback loops on backend engineering, and certainly on frontend, were much faster. You can think of all of the feedback opportunities during writing code as a sort of nested for loops:

If your code doesn’t compile, you’ll know right away. Same thing with failing a unit test. Push it to CI/CD, and it might take a few minutes to an hour to know if it fails. Once it goes live, it could take a few hours for a bug report to come in or get paged. Everything happens quickly, which for the most part keeps engineers focused, productive, and chasing that elusive flow state.

Data teams did not have this quick feedback cycle luxury. If you want to deploy a cron job that runs every night at 12…well, you’d need to wait all the way until 12, and then debug it once it fails because you forgot a semicolon in the YAML. Productionizing jobs, scaling out, scheduling things, and figuring out how to work with GPUs all just seemed to take longer than it did for other engineering teams. And these long cycles aren’t just bad for productivity: for data engineers like Erik, they took the joy out of writing code in the first place.

So this is how Modal started: as a general purpose data cloud, not an AI inference engine. Erik’s goal was to compress the feedback loops for data teams. How do you do that?

One way is to move concerns from outer loops into inner loops. Infrastructure happens in everyone’s outer-most loops: things break once you deploy them in ways that they didn’t break locally. And these outer loops of infrastructure are slow. It takes time to push code to the cloud and time for it to start up and run.

Erik’s idea, then, was to inject infrastructure into the local development flow. If you could write code locally, put it into a container, and run that container in the cloud, all in a few seconds, you could maintain the local feedback loops and make them even better.

1# Modal's system today: write your code, wrap it in a decorator (@app), and it runs in the cloud in seconds

2

3app = modal.App(name="link-scraper")

4

5@app.function()

6def get_links(url):

7 ...

8

9@app.local_entrypoint()

10def main(url):

11 links = get_links.remote(url)

12 print(links)Easy in theory, except for one major problem: none of the systems we have to run containers today are within an order of magnitude of the speed necessary to make this happen.

And so Modal set out to build a container runtime from scratch.

Building a container runtime and filesystem from the ground up

If the goal was to be able to take local code, upload it to a container in the cloud, and run it, all within a few seconds so as not to break the feedback loop, there was a lot of work to do.

For one, just pulling down a Docker image usually takes a minute or more. Docker images are often pretty large: standard Linux ones are usually 1GB or so (some smaller), but once you start putting in CUDA, Torch, etc. they can balloon into 10GB+. These larger images can take several minutes to pull. How do you optimize this and shrink it down to a second or two?

Proof of concept: running a container using runc and NFS

The proof of concept that led to Modal’s current solution started with runc, which you could call one of Docker’s most important primitives. It has one purpose: you point it at a filesystem and it spawns and runs a container on it. It doesn’t have any of the utilities to pull or push images, and doesn’t include the Docker daemon. So it’s small, fast, and a great building block for illegally fast containers.

Then there’s NFS, a distributed file system built in the 80’s that allows a client (or server) to access remote files as if they were local. If you put container images on the network already (a fully unpacked filesystem), you can point runc at them and tell it to start a container. runc doesn’t know if the image is local or remote, it just sees a filesystem and starts a container.

Believe it or not this is actually a minimum viable container service! It’s a way to start a container without needing to actually pull the image from the network.

The Modal container runtime today

To get from this proof of concept to a working product though, Modal had to go a lot further. The current Modal product uses one of two (!) custom filesystems they built, specifically for container images.

Though useful for a POC, runc (and frankly Docker) has a number of system vulnerabilities that makes it totally unfit for a product like Modal where users are running arbitrary code. Today’s Modal product runs on gVisor, a highly secure container runtime that isolates the Linux host from the actual container. Modal was the first company to try to run gVisor on a machine with GPUs (as far as I’m aware), and at the time GPU support was highly experimental; over the years they’ve made a bunch of contributions to help make gVisor with GPUs more production ready.

Caching files locally on SSD

The problem with this MVP is that it was now way too slow to start the container. Python does file system operations sequentially, and every call to NFS takes a few milliseconds, all adding up to an 8-10 second container start time. To get latency down to the original goal (a couple seconds), these files (or at least most of them) need to be read from SSD, or even better, the Linux Page Cache.

The solution, unsurprisingly, is caching, but not in the way you might think. Obviously if we need to read the same image twice, we can cache that. But even different images share a lot of the same files.

These are just a few of the commonly run images on Modal – some of them share >50% of the same files!

To take advantage of this, solve the caching problem, and thus the latency problem, Modal built their own filesystem, which Erik would tell you is less daunting than you might think. The first version was in Python and then ported into Rust to make it faster. It implements the basic FUSE operations like open, read, release, and a few more.

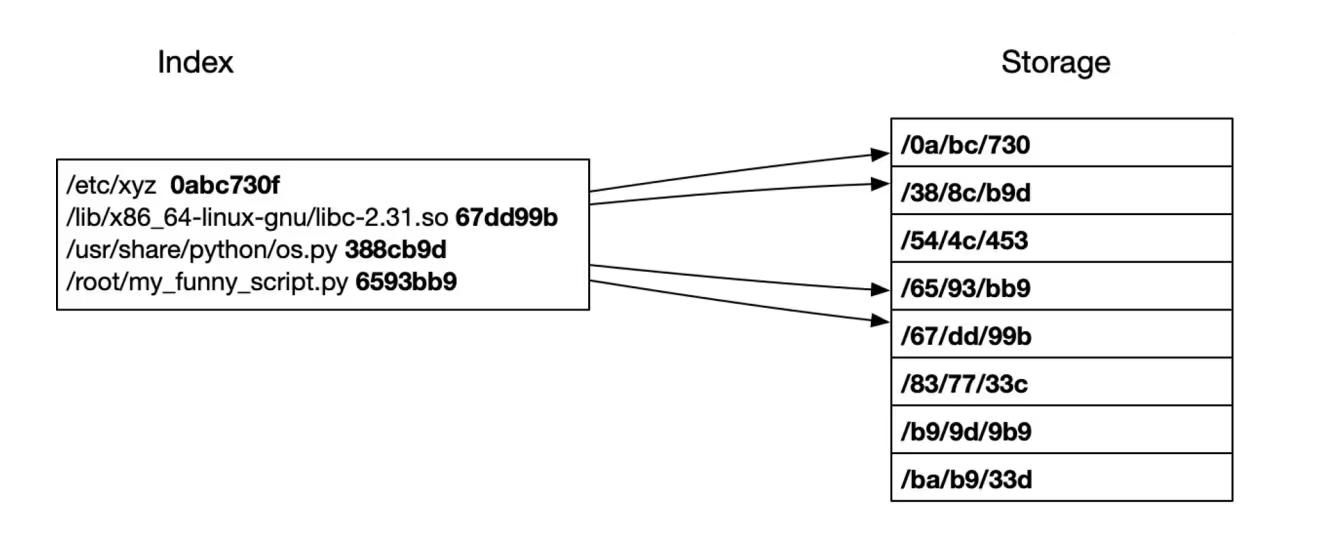

The secret sauce of Modal’s filesystem is caching using content-addressing. They hash the content of each file, and then use the hash as the location of the file. This way, you only need to store each file once, even if it’s on 1,000 different images. Then these hashes get stored in an index in memory.

The goal is to have as many files as possible that the image needs already cached on the machine. When reading a file, they look up the hash in memory: if it’s already on local disk, great. If not, then the system fetches it from the network (blob storage) and caches it locally so it’s stored for next time.

Modal is still working on optimizations here, like zonal caching: files stored in multiple zones so that if there’s a miss, and you need a network trip, files are as close as possible to the machine the container is on.

Memory snapshotting

Even with Modal’s homegrown filesystem and container runtime, cold start times can still be on the longer side the first time you run your code. Earlier this year though, they released a memory snapshotting feature that brings most cold starts down to <3s, even with large dependencies like Torch.

The idea is based on a Linux utility developed back in 1999 (seeing a pattern here?) called CRIU, or checkpoint/restore in userspace. It allows you to snapshot a Linux container and restore it later, even on a different machine. To make sure their system doesn’t keep gVisor waiting when it requests a page, Modal aggressively preloads the entire pages file into page cache as early as they can.

For users, it’s as simple as a setting in your Modal function:

1@app.function(enable_memory_snapshot=True)

2 ...You can read more about how Modal built this here.

VolumeFS

Because building 1 (read-only) filesystem for container images wasn’t hard enough, Modal actually built a second one called VolumeFS. It’s a storage primitive that gives users persistent storage for your Modal functions, across any region, without compromising speed. The original POC was built on NFS, but NFS is only single region, which led to slow read times from containers running in other regions.

This is where the difference between an “AI API” and a full data cloud starts to really matter; VolumeFS allows Modal customers to do things like writing model weights, all without thinking about storage primitives and regions. There might be a fine tuning job running on Modal that writes model weights to a volume, and then you read them back whenever your inference container is running.

Early on, the Modal engineers realized that most of the use cases people had didn’t require strict immediate consistency. Eventual consistency is fine: if you finish a job and write new model weights, they don’t need to be immediately visible to the inference container. And so VolumeFS is eventually consistent, which gave Modal a much more relaxed model to work with.

VolumeFS2 is in the works, and it’s going to be a lot more scalable in terms of how many containers can write to it. This will be huge for distributed training, but even just for writing datasets themselves. For example, the StableDiffusion dataset is literally hundreds of TB, which can take a couple of days to download even with many containers downloading simultaneously.

GPUs and Modal’s multi-cloud routing system

By the time you’re reading this, GPUs might be as readily available as memecoin scams on Twitter. But in the early days of Modal through the time of writing, GPUs were both (a) a highly scarce commodity, and (b) in unbelievably high demand from customers. Sometimes GCP would have capacity when AWS didn’t, and some weeks it was Oracle.

The standard way around this for AI inference providers is deals and partnerships with the major clouds to secure guaranteed GPU capacity, which usually involves committing to one particular cloud provider. Or sometimes, building their own GPU clusters if they’re large enough. Modal did something different.

After slowly figuring out the constraints of the problem via manual, bespoke capacity management, they put together an automated system that allows users to:

- Elastically scale their GPU usage (literally, up to hundreds of GPUs)

- Allocate to GPUs across different clouds efficiently

- Do all of this without users needing to reserve their own cluster

Under the hood, Akshat (Modal CTO) calls this their resource solver. It runs every few seconds, and takes in all of the system constraints:

- All of the workloads Modal has, and their individual region and GPU constraints

- GPUs currently available across clouds in different regions

- The costs of those GPUs

And then solves for where they need to scale up, where they need to scale down, and where they need to consolidate capacity. It’s almost like an autonomous operating system for Modal customers’ GPU constraints. And it factors in individual constraints for a particular workload, e.g. a customer needs their GPUs to be in Ohio so they’re co-located with an S3 bucket and they don’t pay interzone bandwidth fees.

The solver is built using the Google Linear Optimization Package, the bearer of my favorite acronym (GLOP). It has a Python package that you can use to solve linear programming problems. Like much of the ideas that Modal has built on top of, this stuff has been around since the Unix Epoch.

The hard part of this is how many variables there are, from capacity to cost to region. So Modal runs two types of solves: a base solve, which solves for all of the variables at once (slower), and incremental solves, which hold some variables constant (faster). Like a DevOps admin would do for a typical cloud application, Modal is constantly re-running these based on live pricing data and live demand, but at about 1000x the scale.

Today, a big reason why customers come to Modal is that they can scale up to hundreds of GPUs instantly. You don’t need to think about which cloud you’re using at all. You just specify your GPU type (T4, H100, A100-80GB, etc.), and Modal takes care of the rest:

1@app.function(gpu="A100", image=image)

2def run():

3 import torch

4 print(torch.cuda.is_available())

The Modal developer experience: decorators, observability, and more

Most AI inference providers give you an SDK that’s mostly a group of pre-uploaded models and specific functions for working with them. But Modal is a complete data cloud, built for a lot more than just running models: like training, fine tuning, and more complex pipelines. So their developer experience is totally different than just a bunch of APIs. It’s lower level, highly considered, and significantly more flexible than out of the box model providers; and that’s why their customers do a lot more than just inference on it.

The decorator model

The Modal DevEx is built on the decorator paradigm. You essentially annotate your Python code with decorators that tell Modal what you want to put in the cloud and how:

1app = modal.App(name="llm-app")

2

3@app.function()

4def run_llm(model):

5 ...

6

7@app.local_entrypoint()

8def main(url):

9 model = get_model_weights(s3_bucket_url)

10 run_llm

Then a simple modal run filename.py will place and run this code on a container in the cloud in a few seconds.

Decorators were introduced way back in Python 2.4, and they’re basically a function that modifies another function and returns, you guessed it, a function. The reason they’re useful here, though, is that you don’t need to modify your code much to run it on Modal. The Python you were already using to train, fine tune, or deploy a model can now run in the cloud with just a few extra lines.

If you want to expose the container as web endpoint, that’s also just a decorator:

1@modal.web_endpoint()Decorators can also take parameters. For example, if you want run your code as a cron job, you can do that inside of your @app.function() decorator:

1# Using Modal's concept of a Period

2@app.function(schedule=modal.Period(hours=5))

3

4# Using old school cron

5@app.function(schedule=modal.Cron("0 8 * * 1"))And instead of waiting until 12 tonight to realize that it didn’t run, you can use modal deploy to verify that everything is working: not just locally, but on the actual machine it’s going to run on later.

Debugging and making things less serverless

One of Modal’s goals is to make serverless less of a black box: in their minds, you shouldn’t have to choose between self managing hell with great observability, or fully managed paradise without a clue what’s going on behind the scenes. So for a serverless product, they offer a surprisingly rich amount of information as to what’s going on in your Modal container.

For example, you can run a debugger in a fresh instance with the same infrastructure as your production instances with a breakpoint:

1# Start a Modal app that runs an ML model

2@app.function()

3def f():

4 model = expensive_function()

5 # play around with model

6 breakpoint()The breakpoint() function calls the built-in Python debugger, which you can interact with from your Terminal (even though it’s running in a Modal container).

But a fresh instance isn’t always what you want. If you want to debug a live container, you can do that with the modal shell command:

# Connect to a Modal container

modal shell [container-id]

# Then use some preinstalled tools

vim

nano

curl

strace

etc.

The idea here is the Modal takes care of getting your code to a container (or several) and running it, quickly and effortlessly; but once it’s there, you can do whatever you want with it.

Modal sandboxes

Sandboxes are a newer Modal primitive for safely running untrusted code, whether that code comes from LLMs, users, or other third-party sources. One way to think about it is something like a programmatic remote Docker. There’s a simple exec API for running code:

1import modal

2app = modal.App.lookup("sandbox-manager", create_if_missing=True)

3sb = modal.Sandbox.create(app=app)

4

5

6p = sb.exec("python", "-c", "print('hello')")

7print(p.stdout.read())

8sb.terminate()If you ask me, Modal sandboxes are going to be incredibly important as more agents write and run code. Quora uses Modal Sandboxes to power the code execution in Poe, their AI chat platform. When you ask Poe’s AI-powered bots to write and run code, that code executes safely in Modal Sandboxes. They’re completely isolated, meaning the code is kept separate from both the main Quora infrastructure and any other user’s code.

You can read more about Modal sandboxes here.

~

So does Modal help you deploy and run AI in the cloud? Yes. But are they an AI inference company? Absolutely not. From a custom container runtime, to not one but two homegrown file systems, to a linear program solver to handle GPU allocation, they’ve built a lot more than AI inference. Modal is a full data cloud. And as AI workloads and the way companies run them get more and more complex, I think that all of the incredible things they’ve built will get an even wider audience.

You can get started with Modal here (startups get $50K in free credits). And if these kinds of systems and problems sound interesting to you, they’re hiring.