Cancer's 95% problem

Ask a scientist about whether they’re optimistic about curing cancer, and you’ll probably get two answers.

The first is that there’s a lot to be upbeat about. According to the ACS, both cancer incidence rates and death rates have dropped dramatically over the past 20 years, for both men and women. For some of that we have new, more effective drugs to thank. Targeted therapies, immunotherapies, and precision medicine have all gotten better, and there’s a lot more on the way; in some part thanks to breakthroughs in data and AI, there are 150x more targeted cancer therapies in clinical trials than there were 20 years ago.

But more promising leads as there may be, there’s no escaping the troubling fact that 95% of cancer drugs fail to make it past clinical trials. They either don’t work, or work too well and have disastrous side effects. Essentially, we've gotten incredibly good at designing molecules that look promising in the lab, but fail spectacularly when they meet actual human biology. This is because:

- Cancer therapies start by finding a drug that works on a single molecule, a protein.

- But just because it worked on a single molecule, doesn’t mean it will work on the exponentially more complex cell.

- And just because it worked on a single cell, doesn’t mean it will work in an exponentially more complex human, let alone across a population of different humans.

95%! It’s a spectacular number. I can tell you with certainty that if I brought home a test in elementary school that I got a 5 on, heads (my head) would roll. But the human body is so complex, so multifaceted, that even the lowest level of consistency eludes us in cancer drug discovery. Scientists call this the translation problem and if we’re going to make strides in cancer treatment, we’re going to need to solve it.

And yet, most companies today seem to be focusing on the top of the funnel: making it easier, faster, and more efficient to design new molecules. So much of the focus on AI in biotech is fundamentally about scaling up candidate generation. This is critically important work, no doubt…but the fact remains that 95% of these “successes” will still fail to translate to humans. The challenge isn't just creating more drug candidates; it's predicting which ones actually work in patients.

That's where Tahoe Therapeutics comes in. They are solving the 95% problem.

Why drug discovery for cancer therapies is so hard

To fully understand the 95% problem you must understand the wacky, wild world of how we go from a researcher’s idea to something that gets tested – hopefully successfully – on a human being. It looks something like this.

The first step starts at the molecular level: identifying a protein that’s involved in a particular type of cancer (there are hundreds of types of cancer, by the way). Scientists try and design a type of molecule that binds to this protein in such a way that it should, at least theoretically, stop the cancer cell from functioning. To test their hypothesis, they’ll test the new molecule against an isolated protein, seeing if it binds successfully. If it does, they’ve got a “hit.”

Hits in isolated proteins are not like hits in Battleship. After an initial hit, molecules get tweaked and optimized to weed out candidates that aren’t likely to perform well as drugs. Those that survive this gauntlet earn the optimistic title of “lead”. But any excitement about reaching this stage is generally short-lived because the next step up is the cell, and it’s a lot more complicated than an isolated protein. And indeed, of all of these promising protein hits, only 1-2% actually do something useful when tested in cells. That's not a typo. 98-99% of molecules that look great at the protein level completely fail when they encounter the complexity of an actual cell. Actually, it is like Battleship, but for every few squares of ship there are like 1 million squares of ocean.

And remember, the cell isn’t the final boss. Of those 1-2% of hits that indeed do translate to the cellular level, even fewer of them work at the organism level, in most cases a mouse. And of those few that work in mice, most of those still fail in humans. By the time you get to efficacy in a human patient, you're looking at success rates of roughly 1 in 10,000 molecules.

So a hit is not exactly a hit, all things considered. Proteins don't exist in isolation. A drug that perfectly inhibits a target protein in a test tube probably won’t work as advertised when that drug is delivered to a living cell, surrounded by thousands of other molecular interactions.

Moreso, cancer isn't even one disease – or 200, for that matter. When researchers say "colorectal cancer," they're describing hundreds of different biological processes that vary dramatically between patients. The tumor driving cancer in one patient might be completely different from the "same" cancer type in another patient.

It’s hard to avoid the mental image of finding a needle in a haystack. Scientists are essentially running completely blind, hypothesizing and testing at a level of granularity so far removed from real outcomes that the odds are impossibly stacked against them. Through this lens it’s a genuine miracle when we do discover an efficacious new drug.

When you see the full scope of what it takes today to get a cancer drug from molecule to patient, one can’t help but wonder: why are we recruiting more people to look through the haystack instead of figuring out a better way to look?

Turning cancer into a data problem

If you want to find a better way to look through the haystack, you need to treat it like a data problem. What if you could model cells digitally, using computational models to understand how a molecule would impact a cell without manually testing it in a lab? What if you could bypass the ridiculously narrow funnel from molecule to effective treatment, and only test things that actually have a good shot at working? What if you could turn finding cancer drugs into a data problem?

This is the concept of the Virtual Cell. It means a lot of things, the most basic of which is a digital representation of life’s fundamental unit. For decades, teams of scientists have tried with varying degrees of success to build such a model, carefully replicating every element of a cell, from structures to processes and how molecules interact with each other. Markus Covert’s lab painstakingly – manually – were able to (mostly) digitally replicate E. Coli.

To build these digital cells at any sort of scale, you need one thing: data. To be more specific, massive amounts of high-quality data showing how different drugs affect different cell types from diverse patient populations. You need granularity on which cells were affected, compound perturbations, and gene expression profiles. Building a digital cell model is in essence no different from building any other kind of AI model; you need a shit ton of good data to do it.

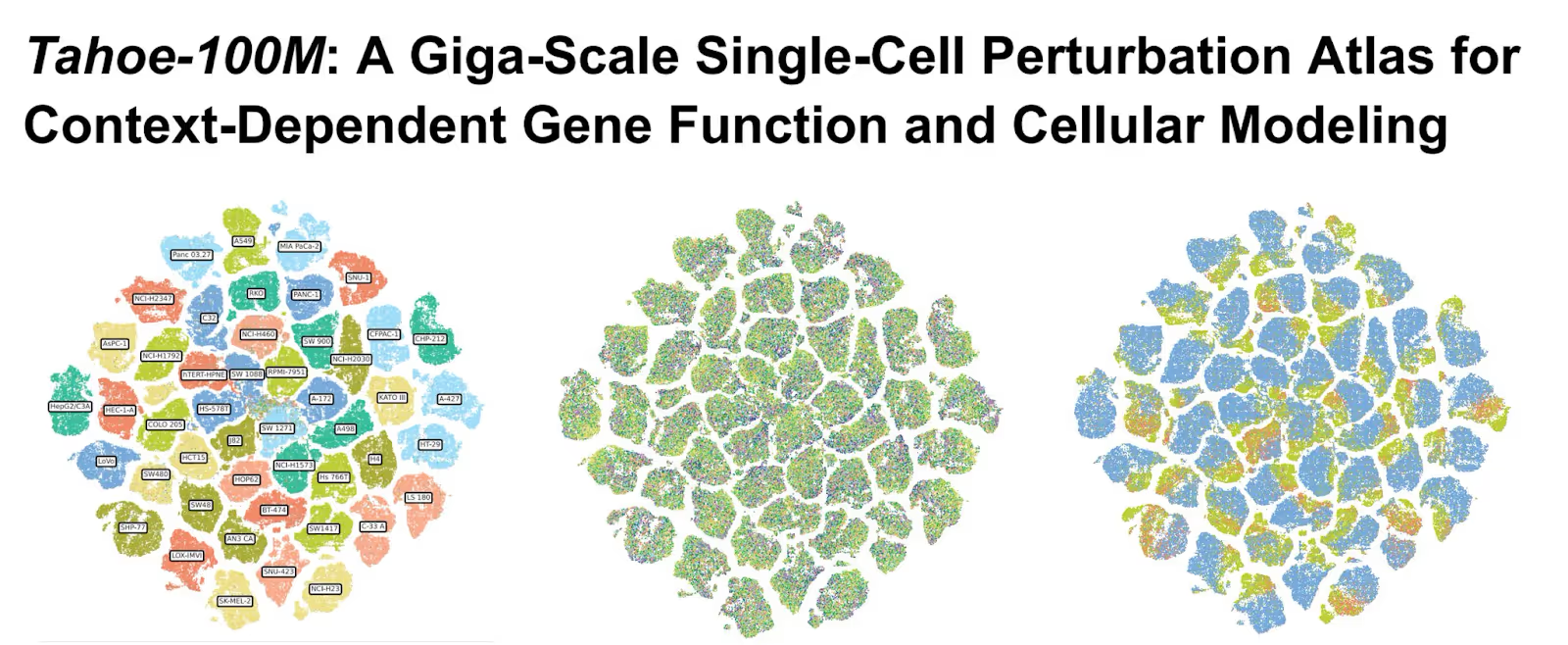

And thanks to Tahoe Therapeutics, we now have a lot more of that data. In February of this year, they released the Tahoe 100-M dataset, a massive, completely open source collection of 100M drug cell interactions and 60,000 drug-patient interactions. To put this scale in context, Tahoe 100-M is 50x larger than all public drug-perturbed data combined. And using AI models trained on this data, Tahoe has already identified promising colorectal cancer drug combinations that could be dramatically more effective than current therapies.

Large Language Models have the entire corpus of the internet. Now, scientists have Tahoe-100M.

The obvious question is: how did they build this thing? To generate a dataset of this size and complexity, Tahoe’s cofounders developed some incredibly novel scientific breakthroughs which, you guessed it, I’m going to tell you all about.

Mosaic, or how to test 100 tumors at the same time

Using traditional 1 by 1 methods, testing enough patients to generate a dataset the size of Tahoe 100M would take decades and billions of dollars.

So instead of testing each patient's cells separately, Tahoe pools cells from diverse cancer patients into what they call a "mosaic tumor." This composite tumor represents a broad spectrum of cancer genetics and can be treated with experimental drugs in either mouse models or sophisticated 3D cell culture systems. Think of it like…a super tumor. But in a good way.

Growing the right amount of a tumor

Creating this super tumor is not as simple as just putting the cells together and hoping for the best. Cancer, at its core, is a disease of growth. And left to their own devices, different types of cancer – and the way they express themselves in patients – will grow at drastically different rates. One of the cells growing too rapidly can easily ruin the experiment by quickly outnumbering the other cancer cells in the group.

Tahoe’s solution is called growth balancing. They run iterative experiments to figure out how fast different cell types grow, then mix them at the beginning in proportions inversely related to their growth rates. This is, of course, easier said than done, and took Tahoe cofounders Johnny Yu and Hani Goodarzi six years to develop at UCSF, and then another two years to scale it 100x at Tahoe. You can read about the specifics of their approach in their paper.

Disentangling cause and effect: barcoding without barcoding

Controlling growth rates is only half of the problem. Because after you’ve assembled your super tumor, you then hit it with a drug and need to analyze what exactly happened. With a single tumor, this is easy – most of the cells should react the same. But how do you measure each individual cell’s different reaction in this multi-cell amalgamation? For this to work, you have to trace every single cell back to its original patient to understand the before-and-after picture of each treatment.

Most labs solve this through what’s called artificial barcoding. They manually insert unique nucleic acid sequences (barcodes) into each cell before the experiment. But this approach has serious limitations: it's unscalable, can alter cellular behavior, and doesn't work in complex mouse models.

Tahoe also uses barcodes, but not artificial ones. Their research discovered natural genetic barcodes: patterns within the cells that remain stable during drug treatment but are unique to each patient. Using AI models, they can map every cell back to its original donor without any artificial modification. You can read about this technique in more detail in the aforementioned paper.

With these natural genetic barcodes, Tahoe can test a single drug against cells from hundreds of patients in a single experiment, then trace exactly how that drug affected each cell from each patient, down to the level of individual genes. And thus, Tahoe-100M is born.

There’s also some impressive engineering work behind the scenes. When the company started, each cell in their experiments cost about $1.50 to analyze. Through relentless optimization, they've reduced that cost to roughly $0.02 per cell.

OK, but tell me about the drugs

The Tahoe 100-M data set is completely open source (you can download it on HuggingFace). Anyone is free to build and train their own models on it, and some larger pharma companies and AI labs are already doing so. But Tahoe is also building their own. Using Tahoe-100M and models trained on the 100-M dataset, they've identified drug combinations that effectively target 40% of colorectal cancer patients—a dramatically larger population than the 10-15% typically addressed by approved drugs.

What made this discovery particularly interesting is that it contradicted the intuitions of veteran cancer researchers. The patient subgroup Tahoe identified was defined by biomarkers that experts had overlooked. Some researchers had even run clinical trials using different criteria to select patients, missing the more effective approach revealed by Tahoe's data.

This points to something important: human intuition, shaped by decades of experience with limited datasets, might be systematically missing patterns that become visible only with sufficient scale and resolution. The ML models aren't just finding needles in haystacks. They're telling us that we've been looking in the wrong haystacks entirely.

Meanwhile, Tahoe plans to scale this approach dramatically. Their next milestone is generating 1 billion cellular data points by the end of 2026, focused on clinically relevant insights that can directly inform trial design. And there’s a flywheel effect here too: as more researchers build virtual cell models using Tahoe's infrastructure, the platform becomes more valuable, attracting additional data and computational resources. They're already collaborating with leading AI researchers at organizations like NVIDIA and the Arc Institute.

Making a dent in cancer’s 95% problem

Curing cancer needs to become a data problem. For decades, we've been making our most critical decisions – which drugs to advance, which patients to treat – based on limited, low-resolution data and intuition. Most of the work researchers do on isolated proteins doesn’t lead to anything real. And though we’re scaling up molecule generation, the 95% problem remains.

Tahoe is building the infrastructure to change that. They're creating comprehensive, high-resolution maps of how drugs interact with human biology across diverse patient populations, and using these insights to develop drugs with far better odds of translating into effective therapies.

Leading Tahoe’s Series A and $30 million of new capital

Yesterday, Tahoe announced a $30 million financing led by Amplify. We are beyond proud to support Tahoe’s founders on their mission to chart a new course for precision medicine, and glad to be working as part of a powerful investor syndicate that includes Wing Venture Capital, General Catalyst, Civilization Ventures, Conviction, Mubadala Ventures, Databricks Ventures, and AIX Ventures.

Amplify has been supporting researchers, engineers, and scientists working at the intersection of life sciences and AI for over a decade. Tahoe’s cofounders Nima Alidoust, Johnny Yu, Hani Goodarzi, and Kevan Shokat are a natural addition to the Amplify Bio family.